Dora Do [engineering / design]

doradocodes@gmail.com

Ambient Watercolor (2022)

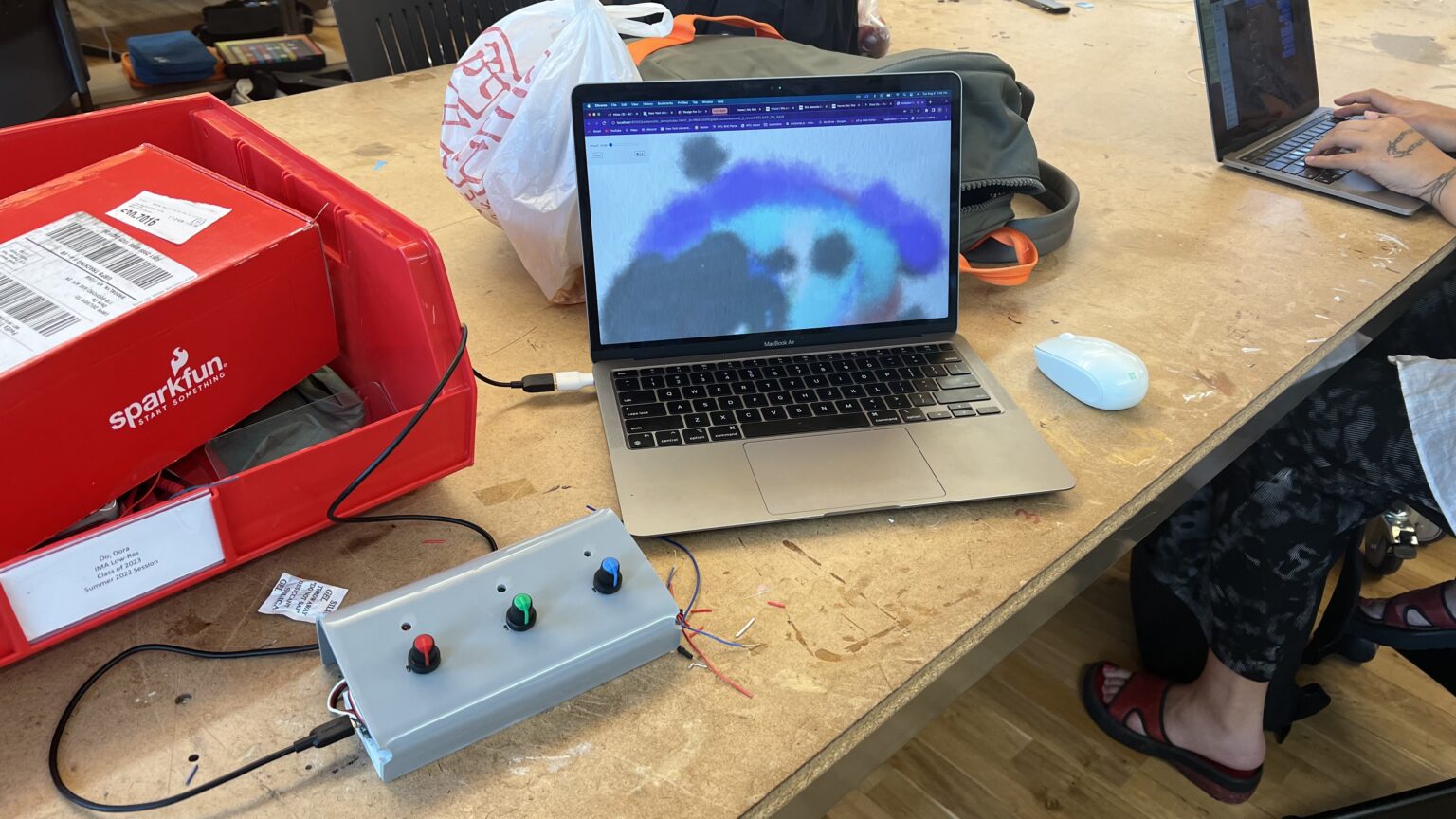

Ambient Watercolor is an experiment with ml5 and generative art. In a calm, tranquil environment, participants can create abstract watercolor paintings using their hands.


Background

This project began at the start of the Summer '22 session and became my final project for the term. I already had coding experience, so my Creative Coding instructor, Carrie, told me to follow my own curriculum. She encouraged me to experiment with anything I had been curious about, so I told her I wanted to learn more about 3D web graphics (Three.js) and art installations. At the time, my ideas for the final project included visualizing music and placing the piece in an art installation.

During the first week of classes, I followed some Three.js tutorials, which taught me the basics of creating objects, manipulating cameras, and shading. For the first creative coding assignment, “Opposites”, I created a 3D object that alternated between turning into a sun and a moon.

While I was experimenting with Three.js in Creative Coding, I was also participating in group exercises in Interface Lab. For one of the exercises, we were tasked with analyzing an existing technological interface. My group chose something simple: the mouse and keyboard. The mouse and keyboard have been so heavily integrated into our society that we overlook the issues that come with them, mainly the pain they can cause many people after long-term use. This, along with playing with the OrbitControls camera in Three.js, led me to toy with the idea of manipulating objects on the screen with just hand gestures.

To do so, I knew that the webcam would be the easiest and most accessible way to recognize and track hand gestures. Carrie suggested I look into ml5, a machine learning JavaScript library, to recognize hand gestures through my webcam. I eventually went down a rabbit hole trying to train my own model to recognize the specific gestures I wanted, since prebuilt models like PoseNet did not do exactly what I needed. In the interest of time, I abandoned my attempt to train my own model and instead modified the design of my intended user interaction to fit PoseNet/HandPose.

Once I was able to import the HandPose library, I modified my original “Opposites” assignment to be a globe. The idea was to rotate the globe horizontally and vertically by moving your hand to the right or left, and to control zoom with two hands (opened to zoom out, closed to zoom in).
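As a rough illustration of this kind of interaction (not the project's actual code), the sketch below maps a tracked hand position inside the webcam frame to a pair of rotation angles. The function name `handToRotation`, the yaw/pitch split, and the angle limits are all assumptions made for the example; the hand coordinates would come from whatever keypoint the hand-tracking model reports.

```javascript
// Hypothetical helper: convert a hand position in the webcam frame
// into rotation angles (in radians) for a globe.
// Assumption: the frame center means "no rotation", the frame edges
// mean maximum rotation, and positions outside the frame are clamped.
function handToRotation(handX, handY, frameWidth, frameHeight, maxAngle = Math.PI) {
  const clamp01 = (v) => Math.min(1, Math.max(0, v));

  // Normalize each axis to the range [-1, 1], with 0 at the frame center.
  const nx = clamp01(handX / frameWidth) * 2 - 1;
  const ny = clamp01(handY / frameHeight) * 2 - 1;

  return {
    yaw: nx * maxAngle,         // horizontal hand movement -> spin left/right
    pitch: ny * (maxAngle / 2), // vertical hand movement -> tilt up/down
  };
}

// Example: a hand three quarters of the way across a 640x480 frame.
const rotation = handToRotation(480, 240, 640, 480);
console.log(rotation.yaw, rotation.pitch);
```

In a Three.js scene, angles like these could be assigned to a mesh's `rotation.y` and `rotation.x` on each animation frame, ideally with some smoothing so that jitter in the tracking does not shake the globe.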

While the rotation effect was cool, the project felt aimless, and I was stumped about what to do with it. Putting this idea on hold, I began researching other topics that interested me, including generative art. I had seen a tutorial about simulating watercolor digitally, using recursion to reproduce the realistic randomness of analog art. I prototyped the idea in p5.js and began to think of ways to bring the ideas from the tutorial into animation. With animation, I thought of ways that would allow users to interact with generative watercolor art.
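One common way to get this kind of effect is recursive polygon deformation: every edge of a shape is split at its midpoint, the midpoint is nudged by a random amount, and the process repeats on the new, more detailed outline. The sketch below is a minimal version of that idea under my own assumptions (the function name `deformPolygon`, the halving of the variance at each level, and the plain `{x, y}` point format are illustrative, and it may differ from the tutorial mentioned above).

```javascript
// Recursively roughen a polygon's outline.
// points:   array of {x, y} vertices describing a closed shape
// depth:    how many times to subdivide (each level doubles the vertex count)
// variance: displacement as a fraction of each edge's length
// rng:      function returning a number in [0, 1); defaults to Math.random
function deformPolygon(points, depth, variance, rng = Math.random) {
  if (depth <= 0) return points;

  const next = [];
  for (let i = 0; i < points.length; i++) {
    const a = points[i];
    const b = points[(i + 1) % points.length]; // wrap around to close the shape

    // Longer edges get larger displacements, so big shapes stay ragged.
    const edgeLength = Math.hypot(b.x - a.x, b.y - a.y);
    const offset = edgeLength * variance;

    // Midpoint of the edge, pushed in a random direction.
    const mid = {
      x: (a.x + b.x) / 2 + (rng() * 2 - 1) * offset,
      y: (a.y + b.y) / 2 + (rng() * 2 - 1) * offset,
    };
    next.push(a, mid);
  }

  // Assumption: halve the variance each level so fine detail stays subtle.
  return deformPolygon(next, depth - 1, variance * 0.5, rng);
}

// Example: roughen a square three times.
const square = [
  { x: 0, y: 0 },
  { x: 100, y: 0 },
  { x: 100, y: 100 },
  { x: 0, y: 100 },
];
const blob = deformPolygon(square, 3, 0.4);
console.log(blob.length);
```

In a p5.js sketch, the watercolor look typically comes from drawing many such deformed copies of the same base shape on top of each other with a very low-alpha fill, so the overlapping edges build up into soft, uneven washes.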

We began learning about serial input and output in Interface Lab, and I liked the idea of having a physical controller to interact with the watercolor sketch. The original idea for the interaction was to use potentiometers to control the paintbrush. However, David pointed out in his feedback that potentiometers can make for a cumbersome user experience, so I began to brainstorm different ways of interacting.

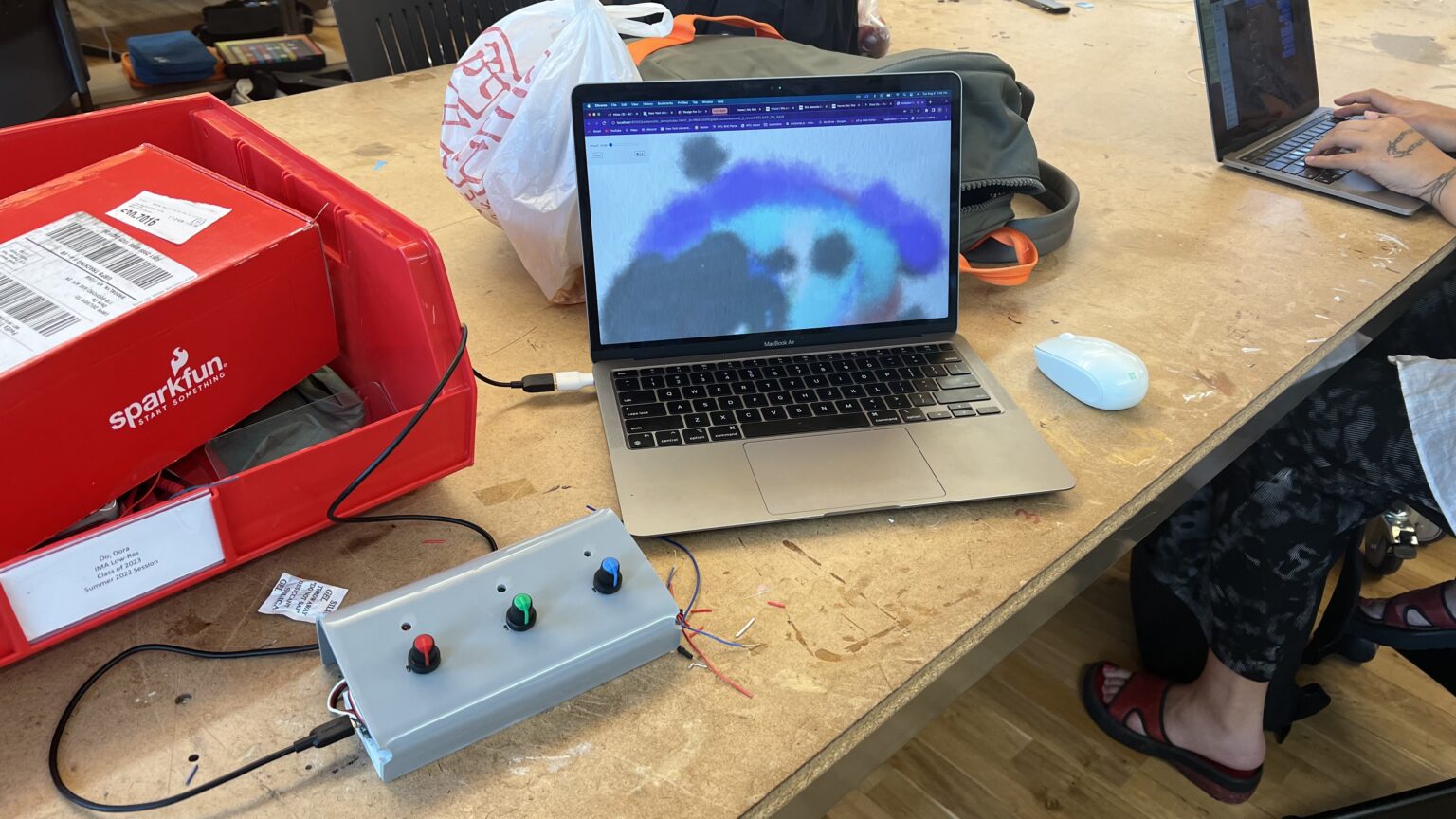

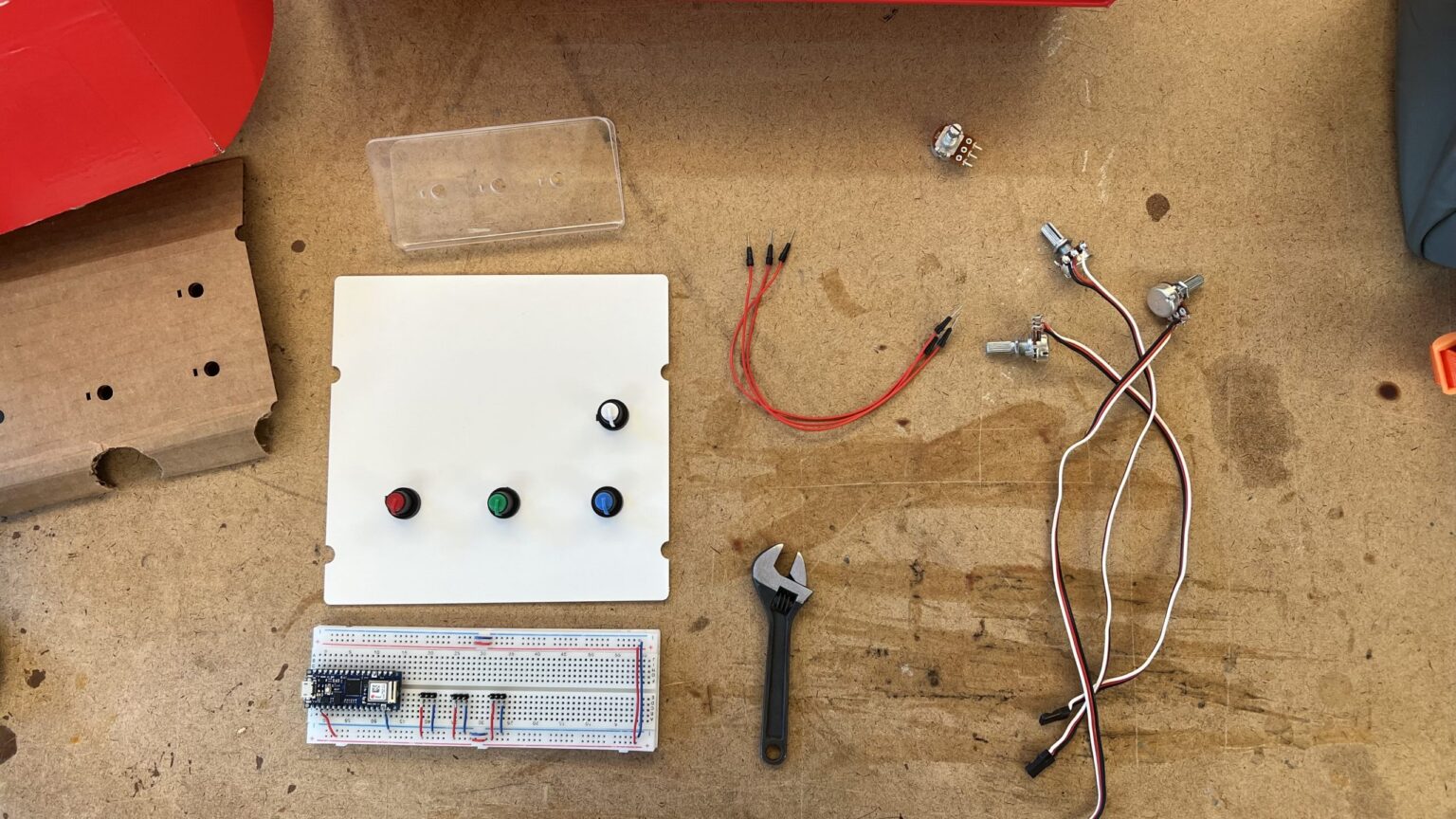

With what I had learned from both ml5 and generative art, I ultimately decided to build my final project as a combination of the two. The goal was to give users a calming and tranquil experience playing with digital watercolor. I wanted users to paint with the watercolor brush using hand gestures, while controlling the brush color with a physical control panel. The panel would have three potentiometers representing the red, green, and blue values of the brush's color.
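To make the color control concrete, here is a hedged sketch of how three potentiometer readings could be turned into a brush color on the browser side. It assumes a microcontroller sends one line of text per reading in the form `red,green,blue`, with each value being a 10-bit analog reading from 0 to 1023. That message format and the function name `parsePotLine` are assumptions for the example, not details from the project.

```javascript
// Hypothetical parser for one line of serial input such as "512,0,1023".
// Returns {r, g, b} with each channel scaled to 0-255, or null if the
// line is malformed (for example, a partial line read at startup).
function parsePotLine(line) {
  const parts = line.trim().split(",");
  if (parts.length !== 3) return null;

  const channels = parts.map((part) => {
    const text = part.trim();
    if (text === "") return NaN; // Number("") would be 0, so reject it explicitly
    const raw = Number(text);
    if (!Number.isFinite(raw)) return NaN;

    // Clamp to the assumed 10-bit range, then rescale to 8-bit color.
    const clamped = Math.min(1023, Math.max(0, raw));
    return Math.round((clamped / 1023) * 255);
  });

  if (channels.some(Number.isNaN)) return null;
  return { r: channels[0], g: channels[1], b: channels[2] };
}

// Example: red knob at zero, green knob halfway, blue knob at maximum.
console.log(parsePotLine("0,512,1023\n")); // { r: 0, g: 128, b: 255 }
```

Returning `null` for bad lines lets the sketch simply keep the previous brush color when a reading arrives incomplete, which is common with serial data.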

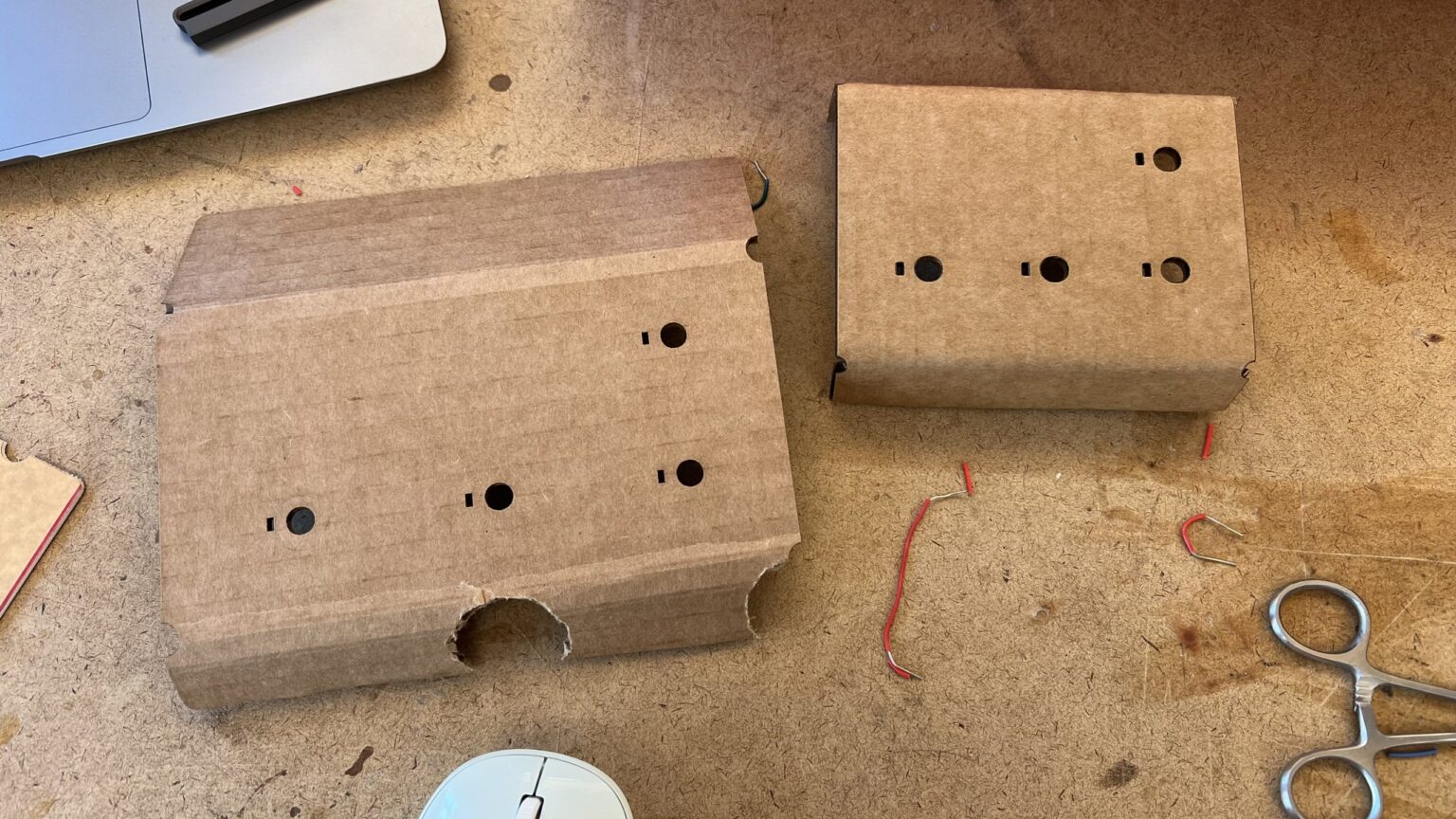

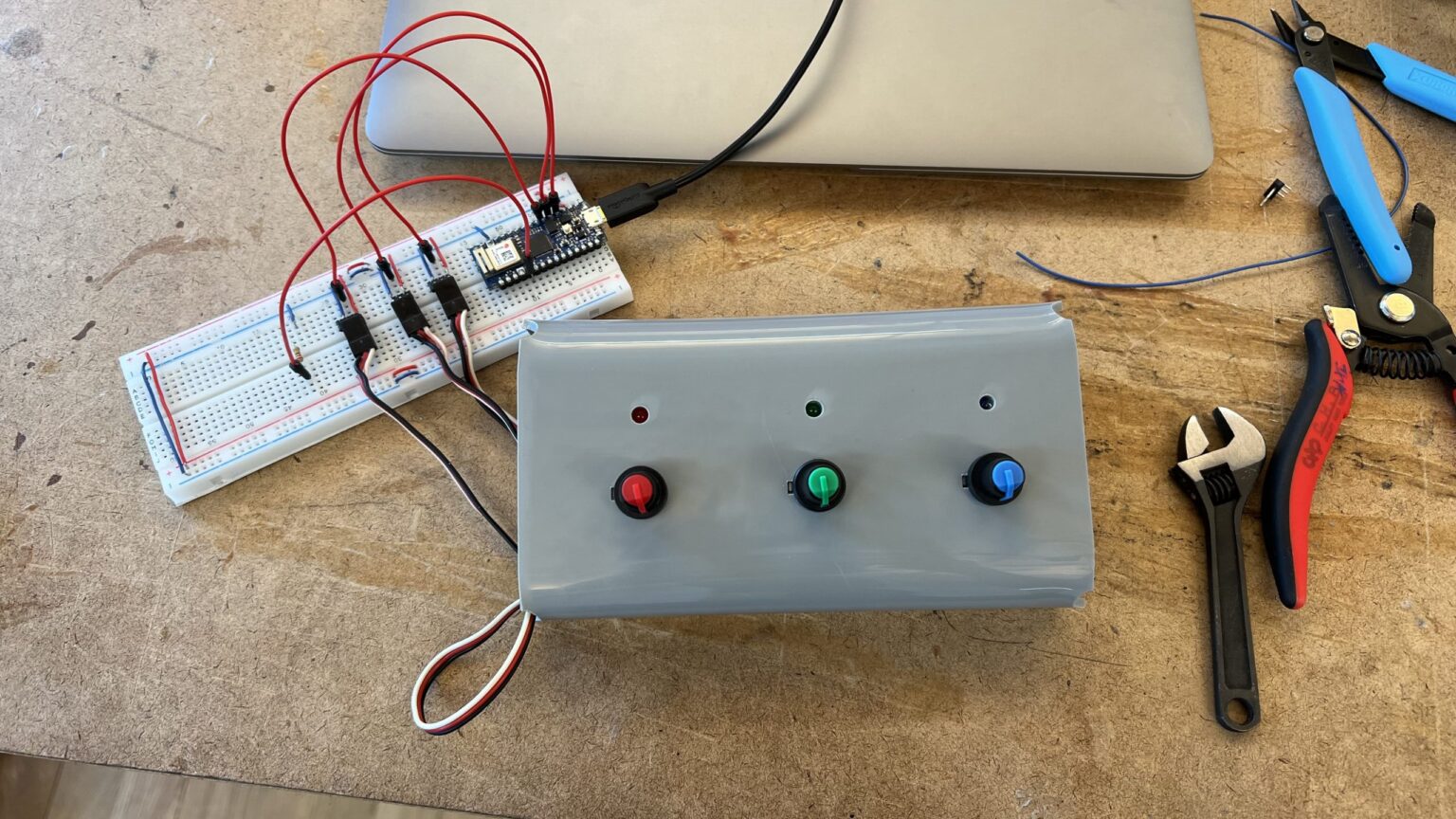

I began building the control panel by laser cutting the design out of cardboard. I wanted to see if the board would stand up at an angle, so that the slanted surface would feel comfortable for the user. After seeing how it would stand with cardboard, I cut a piece of acrylic and began wiring the breadboard to hook up the potentiometers. While I was adding the potentiometers, my classmate, Ai, suggested that I add LEDs to bring more visuals to the panel. Excited about the idea, I cut another piece of acrylic with holes for the LEDs.

This is the final result of the control panel (without the LEDs wired up). Ultimately, I was satisfied with the simple, color-coordinated user interface. Wiring the LEDs was the tricky part: they were superglued underneath the panel, which made soldering the connecting wires difficult.

As a final piece of this project, I added sound to put the user in the desired calm and tranquil headspace. I recorded some ambient notes in GarageBand and played the sound every time a new brush stroke started on the screen.

Here are some examples of users testing the project: